This is the fourth part in a series of blog posts (read part 3 and part 5) giving some practical examples of lambdas, showing what functional programming in Java could look like, and how lambdas could affect some of the well-known libraries in Java land. This part briefly describes a new proposal that was published while I was writing this series, and how it changes some of the examples.

The new proposal

Last week Brian Goetz published a new proposal to add lambda expressions to Java, which builds on the previous straw-man proposal. The two biggest changes are that function types have been removed and that there will be more type inference than before. The new proposal also uses a different syntax for lambda expressions, but it states that this doesn't reflect a final decision on the syntax. Let's have a short look at each of these points.

No more function types

At first, this sounds confusing. Without function types, how could we pass functions to other methods or functions? We need to declare them as parameters somehow. The answer is that functions, or rather lambda expressions, have to be converted to SAM types. Remember that SAM types are single abstract method types, such as interfaces with only one method or abstract classes with only one abstract method, and that lambda expressions can automatically be converted to such types. This is called SAM conversion, which I also discussed in the first part of this series.

So function types will primarily be replaced by SAM conversion: lambda expressions can only appear in places where they can be converted to a SAM type. I don't fully understand the reasons behind this decision, but in a way it makes sense. As briefly mentioned in the first part of this series, from an API designer's perspective you no longer have to scratch your head over whether your methods should take a function type or a SAM type as an argument. And SAM types would probably turn out to be more flexible anyway.
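To make SAM conversion a bit more concrete, here is a minimal sketch. It is written with the lambda syntax that eventually shipped in Java 8, not with the syntax of either proposal, so that it compiles today. The class name SamConversionSketch and its two helper methods are made up for illustration.

```java
import java.io.File;
import java.io.FileFilter;

public class SamConversionSketch {

    // FileFilter is a SAM type: an interface whose single abstract
    // method is boolean accept(File).
    public static FileFilter javaFilesAnonymous() {
        // The pre-lambda way: an anonymous inner class.
        return new FileFilter() {
            @Override
            public boolean accept(File file) {
                return file.getName().endsWith(".java");
            }
        };
    }

    public static FileFilter javaFilesLambda() {
        // SAM conversion: the lambda is converted to the SAM type that
        // the context expects, here the return type FileFilter.
        return file -> file.getName().endsWith(".java");
    }

    public static void main(String[] args) {
        // The files do not need to exist; only their names are inspected.
        File source = new File("Main.java");
        File text = new File("notes.txt");
        System.out.println(javaFilesAnonymous().accept(source));
        System.out.println(javaFilesLambda().accept(source));
        System.out.println(javaFilesLambda().accept(text));
    }
}
```

Both methods return an object of the same SAM type, so callers cannot tell whether a lambda or an anonymous class was used.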

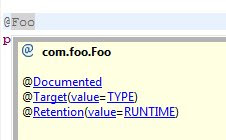

However, this will change almost all of the examples in this series. Wherever a method took a function as an argument, the type of this argument must be changed to a SAM type, and I've used function types almost everywhere. We'll have a look at this later and see whether it now makes sense to have some generic function interfaces such as Function<X,Y>.

More type inferencing

As we've also seen in the examples in this series, lambda expressions can be quite verbose because of type declarations. The new proposal says that basically all types in lambda expressions can be inferred by the compiler: their return types, parameter types and exception types. This will make lambdas much more readable.
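The difference that inference makes can be sketched as follows. This again uses the Java 8 syntax as it later shipped (an assumption relative to the proposal discussed here), and it only demonstrates inferred parameter and return types, not exception types. InferenceSketch and sortedByLength are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

public class InferenceSketch {

    // Parameter types written out explicitly.
    public static final Comparator<String> EXPLICIT =
            (String a, String b) -> Integer.compare(a.length(), b.length());

    // Parameter types left out: the compiler infers them from the
    // target type Comparator<String>.
    public static final Comparator<String> INFERRED =
            (a, b) -> Integer.compare(a.length(), b.length());

    // Returns a sorted copy so the input list is left untouched.
    public static List<String> sortedByLength(List<String> words, Comparator<String> order) {
        List<String> copy = new ArrayList<String>(words);
        Collections.sort(copy, order);
        return copy;
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList("lambda", "sam", "java");
        System.out.println(sortedByLength(words, EXPLICIT)); // prints [sam, java, lambda]
        System.out.println(sortedByLength(words, INFERRED)); // prints [sam, java, lambda]
    }
}
```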

Alternative syntax

As said, the syntax of the new proposal does not reflect a final decision, but in this proposal it looks much more like Scala or Groovy:

FileFilter ff = { file -> file.getName().endsWith(".java") };

Although I personally like this arrow syntax much better, I'll stick with the #-syntax in this series because the prototype uses it.

More changes

Just for completeness, the proposal contains some more changes: this in lambdas has different semantics than before, break and continue aren't allowed in lambdas, and instead of return, yield will be used to return values from a lambda expression (there are no details on exactly how yield works). Also, there will be method references, which allow referencing methods of an existing class or object instance.
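The proposal's own notation for method references is not shown in this post, so the following sketch falls back on the :: form that Java 8 later adopted. It also shows how this behaves inside a lambda in Java 8, where it refers to the enclosing object; whether that matches the proposal in every detail is an assumption. MethodReferenceSketch and its members are hypothetical.

```java
import java.io.File;
import java.io.FileFilter;

public class MethodReferenceSketch {

    private final String suffix;

    public MethodReferenceSketch(String suffix) {
        this.suffix = suffix;
    }

    public boolean matches(File file) {
        return file.getName().endsWith(suffix);
    }

    // Reference to a method of an existing object instance (here: this).
    public FileFilter asMethodReference() {
        return this::matches;
    }

    // Inside the lambda, this is the enclosing MethodReferenceSketch,
    // not the FileFilter object that the lambda is converted to.
    public FileFilter asLambda() {
        return file -> file.getName().endsWith(this.suffix);
    }

    // Reference to an instance method of an existing class; the File
    // passed to accept() becomes the receiver of isDirectory().
    public static FileFilter directories() {
        return File::isDirectory;
    }

    public static void main(String[] args) {
        MethodReferenceSketch java = new MethodReferenceSketch(".java");
        File source = new File("Main.java");
        System.out.println(java.asMethodReference().accept(source));
        System.out.println(java.asLambda().accept(source));
    }
}
```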

Impact on the examples

Now, let's have a look at how the new proposal changes some examples of the previous posts. The file filtering example from part 1 looked like this:

public static File[] fileFilter(File dir, #boolean(File) matcher) {
    List<File> files = new ArrayList<File>();
    for (File f : dir.listFiles()) {
        if (matcher.(f)) files.add(f);
    }
    return files.toArray(new File[files.size()]);
}

The client code looked like this:

File[] files = fileFilter(dir, #(File f)(f.getName().endsWith(".java")));

As there will be no function types anymore, we have to change the fileFilter method so that it takes a SAM type instead of a function type. In this case it's simple: we can change the second argument's type to FileFilter and call its accept() method:

public static File[] fileFilter(File dir, FileFilter matcher) {
    List<File> files = new ArrayList<File>();
    for (File f : dir.listFiles()) {
        if (matcher.accept(f)) files.add(f);
    }
    return files.toArray(new File[files.size()]);
}

The client side is still the same, though.

Now, as you might have noticed (or remembered from part 2), this is quite a bad example, because there is already the File.listFiles(FileFilter) method, which we can also call with a lambda:

File[] files = dir.listFiles(#(File f)(f.getName().endsWith(".java")));

The lambda will be converted to a FileFilter automatically in this case. But although the fileFilter method is a bad example, it shows quite well that the removal of function types has very little impact if there is already a corresponding SAM type.
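As a rough check that the hand-written fileFilter and the existing File.listFiles(FileFilter) really do the same job, here is a small runnable sketch in the Java 8 syntax that later shipped. The class ListFilesSketch and the helpers sampleDir and names are made up for this illustration, and the null check is an addition of mine that the original method does not have.

```java
import java.io.File;
import java.io.FileFilter;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

public class ListFilesSketch {

    // The helper from the text, plus a null check, because listFiles()
    // returns null when dir is not a readable directory.
    public static File[] fileFilter(File dir, FileFilter matcher) {
        List<File> files = new ArrayList<File>();
        File[] children = dir.listFiles();
        if (children != null) {
            for (File f : children) {
                if (matcher.accept(f)) files.add(f);
            }
        }
        return files.toArray(new File[files.size()]);
    }

    // Hypothetical helper: a temporary directory with one .java file
    // and one .txt file.
    public static File sampleDir() {
        try {
            File dir = Files.createTempDirectory("sam-sketch").toFile();
            new File(dir, "A.java").createNewFile();
            new File(dir, "notes.txt").createNewFile();
            return dir;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Sorted file names, so results compare equal regardless of listing order.
    public static List<String> names(File[] files) {
        List<String> names = new ArrayList<String>();
        for (File f : files) names.add(f.getName());
        Collections.sort(names);
        return names;
    }

    public static void main(String[] args) {
        File dir = sampleDir();
        FileFilter javaFiles = f -> f.getName().endsWith(".java");
        System.out.println(names(fileFilter(dir, javaFiles)));    // prints [A.java]
        System.out.println(names(dir.listFiles(javaFiles)));      // prints [A.java]
    }
}
```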

Before we go further, here's the client side with the arrow syntax and type inference:

File[] files = fileFilter(dir, f -> { f.getName().endsWith(".java") });

Now, let's see how the removal of function types changes the examples in the absence of an appropriate SAM type. For that, here is the List<T>.map function from part 3 again:

public <S> List<S> map(#S(T) f) {
    List<S> result = new ArrayList<S>();
    for (T e : this) {
        result.add(f.(e));
    }
    return result;
}

We need to replace its function type argument f with a SAM type. We could start by writing an interface Mapper with a single method map. This would be an interface specifically for use with the map function. But bearing in mind that there are probably many more functions similar to our map function, we could create a more generic Function interface, or more specifically an interface Function1<X1,Y>, which represents a function that takes exactly one argument of type X1 and returns a value of type Y.

public interface Function1<X1,Y> {
    Y apply(X1 x1);
}

With this we could change our map function to take a Function1 instead of a function type argument:

public <S> List<S> map(Function1<T,S> f) {
    List<S> result = new ArrayList<S>();
    for (T e : this) {
        result.add(f.apply(e));
    }
    return result;
}

Again, the client side would still be the same. The lambda expression will be converted to a Function1.

List<Integer> list = new List<Integer>(2,4,2,5);
List<String> result = list.map(n -> {"Hello World".substring(n)});
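The map example can be tried out today with a small adaptation. The sketch below is an assumption-laden stand-in: it uses a static map method over java.util.List instead of the custom List<T> class from part 3, and the Java 8 lambda syntax instead of the proposal's. MapSketch is a hypothetical class name.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class MapSketch {

    // The generic one-argument function SAM type from the text.
    public interface Function1<X1, Y> {
        Y apply(X1 x1);
    }

    // Static stand-in for the List<T>.map method of the custom List class.
    public static <T, S> List<S> map(List<T> list, Function1<T, S> f) {
        List<S> result = new ArrayList<S>();
        for (T e : list) {
            result.add(f.apply(e));
        }
        return result;
    }

    public static void main(String[] args) {
        List<Integer> list = Arrays.asList(2, 4, 2, 5);
        // The lambda is converted to a Function1<Integer, String>.
        List<String> result = map(list, n -> "Hello World".substring(n));
        System.out.println(result); // prints [llo World, o World, llo World,  World]
    }
}
```

Note that neither type argument of Function1 is written at the call site; both are inferred from the list and from the lambda body.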

The other examples are basically the same, so I think this is enough to show the changes that the new proposal brings by removing function types.

Finally, let's have a short look at the new syntax and the stronger type inference. With the initial proposal, a File.eachLine method could be called like this (see also part 2):

file.eachLine(#(String line) {
    System.out.println(line);
});

With the new proposal's syntax it would look more like this:

file.eachLine(line -> {
    System.out.println(line);
});

And with some more syntactic sugar, e.g. if it were allowed to drop the parentheses, and to leave out a lambda's parameter when there is only one and refer to it as it, it would look even more like a Groovy control structure:

file.eachLine {
    System.out.println(it);
};

But this is not part of any proposal yet.
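The eachLine calls above assume a method that java.io.File does not have. One way such a method could be built on top of a SAM type is sketched here as a static helper that reads from any Reader. The names EachLineSketch, LineHandler and collect are hypothetical, and the code uses the Java 8 syntax that later shipped.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;

public class EachLineSketch {

    // Hypothetical SAM type for the per-line callback; not a JDK interface.
    public interface LineHandler {
        void handle(String line);
    }

    // Calls the handler once per line and closes the reader afterwards.
    public static void eachLine(Reader source, LineHandler handler) {
        try (BufferedReader reader = new BufferedReader(source)) {
            String line;
            while ((line = reader.readLine()) != null) {
                handler.handle(line);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Small helper that gathers all lines of a string into a list.
    public static List<String> collect(String text) {
        List<String> lines = new ArrayList<String>();
        eachLine(new StringReader(text), line -> lines.add(line));
        return lines;
    }

    public static void main(String[] args) {
        eachLine(new StringReader("first\nsecond"), line -> {
            System.out.println(line);
        });
    }
}
```

To read an actual file, a java.io.FileReader could be passed as the source.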

To summarize, the removal of function types does not have a huge impact on the examples, and in a way it removes complexity and feels more Java-like. To me it would be quite reasonable to have generic function SAM types in the standard libraries, e.g. Function0 for a function that takes no arguments, Function1 for a function that takes one argument and so on (up to Function23, then Java would have more than Scala, yay!). Further complexity is removed by the stronger type inference capabilities.
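A minimal sketch of what the first few members of such a family could look like is given below, again in the Java 8 syntax that later shipped. The interfaces follow the naming suggested in the text; the class FunctionFamilySketch and the compose helper are my own additions for illustration and are not part of any proposal.

```java
public class FunctionFamilySketch {

    // A function that takes no arguments.
    public interface Function0<Y> {
        Y apply();
    }

    // A function that takes one argument.
    public interface Function1<X1, Y> {
        Y apply(X1 x1);
    }

    // A function that takes two arguments.
    public interface Function2<X1, X2, Y> {
        Y apply(X1 x1, X2 x2);
    }

    // Hypothetical helper: applies f first, then g to f's result.
    public static <A, B, C> Function1<A, C> compose(Function1<A, B> f, Function1<B, C> g) {
        return a -> g.apply(f.apply(a));
    }

    public static void main(String[] args) {
        Function0<String> greeting = () -> "Hello World";
        Function1<String, Integer> length = s -> s.length();
        Function2<Integer, Integer, Integer> add = (a, b) -> a + b;
        Function1<String, Integer> lengthPlusOne = compose(length, n -> add.apply(n, 1));

        System.out.println(greeting.apply());                       // prints Hello World
        System.out.println(lengthPlusOne.apply(greeting.apply()));  // prints 12
    }
}
```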